Go 1.24 introduces new support for "Tools", which allows easy consumption of tools (which are written in Go) as a dependency for a project.

This could be anything from golangci-lint to protoc-gen-go.

In this post, I will cover usage and limitations.

Basic usage

Adding a tool to a project is nearly the same as a standard runtime dependency, with the additional -tool flag:

$ goimports # I don't have goimports yet!

zsh: command not found: goimports

$ go get -tool golang.org/x/tools/cmd/goimports

go: added golang.org/x/mod v0.22.0

go: added golang.org/x/sync v0.10.0

go: added golang.org/x/tools v0.29.0

$ go tool goimports --help

usage: goimports [flags] [path ...]

Once we add a tool, we can access it by go tool <name>.

There are a few other ways to use go tool, but it's a pretty simple command:

- A plain go tool will list all tools. Note there are some built-in to Go, so you will always have a few.
- go tool <name>, as seen above, executes a tool. <name> can be a shortname or the full path like golang.org/x/tools/cmd/goimports.
- -n will print out the path to the tool on the filesystem:

  $ go tool -n goimports
  /go-build/45/45c850e978e426ca-d/goimports

- -modfile (undocumented) allows customizing which go.mod file to use. This will be important later.

Tools will show up in go.mod:

go 1.24.0

tool golang.org/x/tools/cmd/goimports

require (

golang.org/x/mod v0.22.0 // indirect

golang.org/x/sync v0.10.0 // indirect

golang.org/x/tools v0.29.0 // indirect

)
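Since a plain go tool mixes the built-in tools with your own, it can be handy to read the tool directives straight from go.mod. The following is a minimal sketch, not an official interface: list_tools is a hypothetical helper name, and it assumes tool directives appear either as single lines (as above) or grouped in a tool ( ... ) block.

```shell
# list_tools [path-to-go.mod]
# Hypothetical helper: prints one tool package path per line.
list_tools() {
  awk '
    # Start of a grouped block: tool (
    $1 == "tool" && $2 == "(" { in_block = 1; next }
    # End of the grouped block
    in_block && $1 == ")" { in_block = 0; next }
    # Entry inside the block (skip blank lines and // comments)
    in_block && NF > 0 && $1 !~ /^\/\// { print $1; next }
    # Single-line form: tool <path>
    $1 == "tool" && NF >= 2 { print $2 }
  ' "${1:-go.mod}"
}
```

Running list_tools in the project above should print only golang.org/x/tools/cmd/goimports, without the built-in tools.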

Overall, the new tool support is a pretty simple way to solve a common problem with a bit more first-class feel. However, it does come with some potential concerns (depending on your use case).

Issues

Slow builds

While the built-in tools (such as pprof or vet) are precompiled and distributed with Go itself, user defined tools are compiled each time they are used.

As previously discussed, Go build times vary based on the exact usage. Even a small tool will likely take a few seconds on the first usage, while a larger tool may take minutes. Subsequent execution is generally cached extremely well, but larger tools will still suffer some overhead.

Here are some examples comparing native execution times to execution through go tool:

Benchmark 1: goimports --help

Time (mean ± σ): 1.6 ms ± 0.8 ms [User: 0.9 ms, System: 0.5 ms]

Range (min … max): 0.8 ms … 10.0 ms 1907 runs

Benchmark 2: go tool goimports --help

Time (mean ± σ): 73.1 ms ± 7.7 ms [User: 113.4 ms, System: 104.7 ms]

Range (min … max): 59.4 ms … 92.1 ms 39 runs

Summary

goimports --help ran

44.33 ± 22.50 times faster than go tool goimports --help

goimports is a pretty small tool, less than 5MB on my machine. Larger tools suffer a correspondingly larger overhead. trivy is a handy tool that is just under 200MB:

Benchmark 1: trivy --help

Time (mean ± σ): 56.2 ms ± 3.3 ms [User: 53.0 ms, System: 31.5 ms]

Range (min … max): 51.0 ms … 65.9 ms 44 runs

Benchmark 2: go tool trivy --help

Time (mean ± σ): 456.6 ms ± 36.9 ms [User: 2092.2 ms, System: 549.1 ms]

Range (min … max): 391.8 ms … 505.9 ms 10 runs

Summary

trivy --help ran

8.13 ± 0.81 times faster than go tool trivy --help

For infrequently invoked commands the overhead may not be a big deal, but for repeated usage the overhead may be a limiting factor.
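To reproduce this kind of comparison for your own tools, something like the sketch below may help. It assumes the numbers above came from hyperfine (the output format matches it), that hyperfine is installed, and that the tool is both on your PATH and registered in go.mod. compare_tool is a hypothetical helper name.

```shell
# compare_tool <name>
# Hypothetical helper: benchmarks the native binary against `go tool <name>`.
compare_tool() {
  name="${1:?tool name}"
  # --warmup keeps the slow first build out of the measurements.
  # --ignore-failure is needed when `--help` exits non-zero (as goimports does).
  hyperfine --warmup 3 --ignore-failure \
    "${name} --help" \
    "go tool ${name} --help"
}
```

For example, compare_tool goimports compares goimports --help against go tool goimports --help.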

Digging into timings

See my other post for more info on analyzing Go build times

Interestingly, Go 1.24 also comes with an optimization in the go command that allows it to cache fully linked binaries.

This is used by go tool, but also the existing go run.

First question: is go tool any faster than go run?

Yes!

Benchmark 1: go tool trivy --help

Time (mean ± σ): 457.6 ms ± 32.1 ms [User: 2191.2 ms, System: 524.6 ms]

Range (min … max): 410.2 ms … 516.2 ms 10 runs

Benchmark 2: go run github.com/aquasecurity/trivy/cmd/trivy@latest --help

Time (mean ± σ): 594.4 ms ± 27.6 ms [User: 2160.3 ms, System: 592.8 ms]

Range (min … max): 562.8 ms … 634.5 ms 10 runs

Summary

go tool trivy --help ran

1.30 ± 0.11 times faster than go run github.com/aquasecurity/trivy/cmd/trivy@latest --help

The work done is almost the same, but each go run command is making network calls to resolve the module.

Given this, it's fairly surprising how close the two are!

However, this is basically a fixed overhead per invocation, so smaller modules show a bigger difference: go tool is almost 3x faster for smaller tools.

Next up: why is the overhead so large, given the new caching optimizations?

The expectation is that repeat invocations should be fully cached and fast.

To investigate this, I made a custom build of Go with additional diagnostic tooling enabled for go tool (it is present for every command except go tool; see issue).

In retrospect, I could have just analyzed go run invocations and ignored the module resolution. However, I didn't know they behaved essentially identically until after I did this.

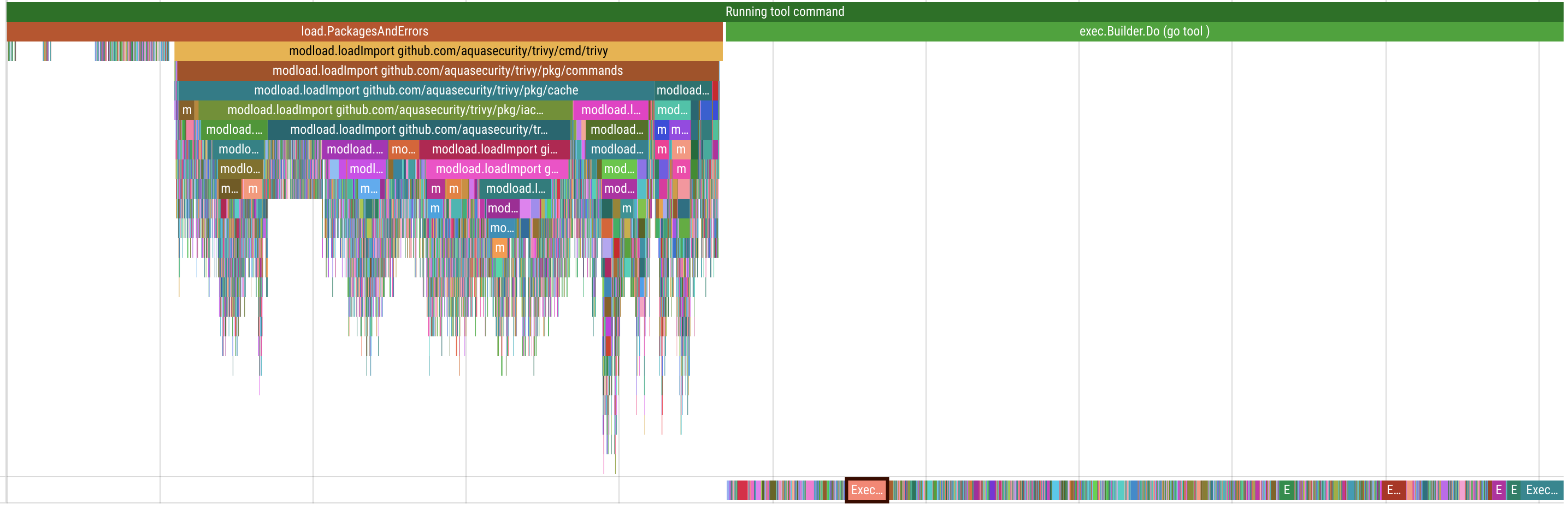

Above shows a trace of an invocation of go tool trivy.

The total runtime is 2s, with roughly 50% spent on loading the package, and 50% on "build".

So it appears that while Go does ultimately cache each step, determining there is a cache hit consumes a non-trivial amount of time.

Last question: did the caching optimization actually help?

To put this to the test, I made a small custom go binary with this disabled (as simple as commenting out CacheExecutable = true!) and compared the two:

Benchmark 1: go-without-exec-cache tool -n trivy

Time (mean ± σ): 7.168 s ± 0.080 s [User: 9.605 s, System: 1.616 s]

Range (min … max): 7.050 s … 7.343 s 10 runs

Benchmark 2: go tool -n trivy

Time (mean ± σ): 425.5 ms ± 19.8 ms [User: 2220.6 ms, System: 693.8 ms]

Range (min … max): 401.6 ms … 472.2 ms 10 runs

Summary

go tool -n trivy ran

16.85 ± 0.81 times faster than go-without-exec-cache tool -n trivy

That is a definitive yes.

Shared dependency state

Tools managed by Go end up in the go.mod/go.sum.

This can be a good or bad thing, but I tend to think it leans towards negative.

Generally, I am much more strict about dependencies in my project vs dependencies in my tools. For example, I would not like my project to import 100s of megabytes of bloat from cloud vendor SDKs or other heavy dependencies, but a tool doing so isn't a big deal. I may not even care about a tool having a dependency with a CVE in it (depending on the details)! However, merging all the dependencies together blurs these lines and makes it harder to pick apart where each dependency is used.

Go is usually pretty good about allowing you to only pull down dependencies that are explicitly needed, but not in all cases.

For example, go mod download or go mod vendor would also pull in all of the tools' dependencies, which can be a substantial cost -- adding a single tool to one of my projects brought the dependency size from 130MB to 170MB.

The biggest issue, in my opinion, is the shared version resolution. The main project and all tools must share a single version of each dependency. This means tools are being run with different dependencies than they were built and tested against, which could lead to subtle bugs or outright build failures. Additionally, a tool requiring a particular version of a dependency could force your project towards certain versions of its own dependencies. While this is already a problem with standard dependencies, I suspect that tools are more likely to introduce issues here, as they tend to be less disciplined than libraries about keeping dependencies minimal, up to date, and compatible with ranges of dependency versions.
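One way to see who is pulling on a shared dependency is to filter the output of go mod graph, which prints one "requirer dependency@version" edge per line. The helper below is a small sketch of that idea. who_requires is a hypothetical name, and it only reports the versions each module asks for, not the single version Go ends up selecting.

```shell
# go mod graph | who_requires <module-path>
# Hypothetical helper: prints "<requirer> <requested version>" for each
# module in the graph that requires the given dependency.
who_requires() {
  dep="${1:?module path}"
  awk -v dep="$dep" '
    {
      at = index($2, "@")
      # Match on the module path only, ignoring the version suffix.
      if (at > 0 && substr($2, 1, at - 1) == dep) {
        print $1, substr($2, at + 1)
      }
    }
  '
}
```

For example, go mod graph | who_requires golang.org/x/mod shows whether a tool and the main project are asking for different versions of golang.org/x/mod.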

Verbose usage

While not a huge deal, all go tools must be executed via go tool <name> instead of just <name>.

Only Go tools are supported

Most projects will likely depend on some non-go tools, so a solution will still be needed for those (unless...).

Mitigations and real world usage

I've explored in the past a tooling setup that I think is ideal for a project:

- Any tools needed for developers are available to access in the repo, under something like ./bin/<tool>. Users can use PATH=./bin:$PATH to make them available "natively".
- Tools are pulled on-demand on first usage and cached. They shouldn't require any/many dependencies to run.

I had used nix in the past for this, which comes with quite a few warts.

go tool offers some similar possibilities, so it's worth exploring this route.

However, we will need to mitigate the first 3 of the above issues.

On the projects I primarily work on, we don't follow this approach and instead use a docker image loaded with every tool we will need. This is unfortunate, as it forces docker and the image is huge since there is no incremental fetching (~6GB).

Ultimately, I came up with a strategy that looks something like below:

tools

├── tool1

├── tool2

└── source

├── tool1

│ ├── cache

│ ├── go.mod

│ └── go.sum

└── tool2

├── cache

├── go.mod

└── go.sum

Under tools/<toolname> we will have a small script that calls go tool <toolname>.

This allows us to invoke the tool with just toolname and sets up some of the next steps.

This solves the "verbose usage" problem.

Each tool will get its own unique go.mod file, ensuring we don't mangle the dependencies of other tools or the primary project.

One downside of this is that we may have different versions of the same dependency across tools, limiting caching. However, I feel this is worth the benefits.

One issue with this is that we need to be within the module to call go tool - we will solve that in the next step.

This solves the "shared dependency state" problem.

That leaves us with the "slow build" problem.

One option here is to utilize go tool -n to get the path of the binary and cache the result.

Subsequent calls can use the explicit binary path, sidestepping any overhead.

The problem is the binary location is ephemeral.

Not only could it be removed, we might need to rebuild it if versions or other aspects of the environment change.

We will solve this in the next step as well.

This solves the "slow build" problem.

Tool execution

A simple version of one of our tool scripts could look like so:

bin="$(go1.24rc2 tool -modfile=./tools/source/goimports/go.mod -n "golang.org/x/tools/cmd/goimports")"

exec "${bin}" "$@"

All of the complexity comes from our ad-hoc caching we add on top.

My approach here is to store a file that contains a map of Hash Key => Binary Path.

When we want to execute a tool, we look up our hash key and use the existing path if it's found (and still exists on the disk).

Otherwise we build and store the new key.

I am not entirely sure why Go's caching is relatively slow here.

It's possible that it isn't optimized for the tools case, where we have no local files to consider.

Or, it may consider additional environmental factors that are not relevant to our use case here.

Whatever the reason, it seems sufficient to hash the go.mod, go.sum, and go env to build our hash key.

A quick benchmark shows this taking ~5ms on my machine, so fast enough to put in our hot-path of execution.

Ultimately I put together something like so:

#!/bin/bash

set -e

WD=$(dirname "$0")

WD=$(cd "$WD"; pwd)

toolname="${1:?tool name}"

name="$(basename "$toolname")"

function hash() {

{

cat "${WD}/source/${name}"/go.*

# GOGCCFLAGS changes each time

go env | grep -v GOGCCFLAGS

} | sha256sum | cut -f1 -d' '

}

function findpath() {

local path="$1"

local key="$2"

while read line; do

fk="$(<<<"$line" cut -f1 -d=)"

if [[ "${fk}" == "${key}" ]]; then

# We found a matching hash key, print the path

<<<"$line" cut -f2 -d=

break

fi

done <"${path}"

}

pushd "${WD}/source/${name}" > /dev/null

key="$(hash)"

if [[ -f "${WD}/source/${name}.cache" ]]; then

bin="$(findpath "${WD}/source/${name}.cache" "${key}")"

if [ ! -f "${bin}" ]; then

# We found a cached entry, but it has been removed from disk

sed -i "/${key}=/d" "${WD}/source/${name}.cache"

bin=""

fi

fi

# Need to build

if [[ -z "${bin}" ]]; then

bin="$(go tool -modfile="${WD}/source/${name}/go.mod" -n "${toolname}")"

# Store our entry in the cache

echo "${key}=${bin}" >> "${WD}/source/${name}.cache"

fi

# First arg was the binary to run, so shift it.

shift

exec "${bin}" "$@"

This gives the same behavior as the simple version, but in return for the additional complexity we get caching.

This is then executed like exec "${WD}/run-tool" "golang.org/x/tools/cmd/goimports" "$@".

A small helper script sets the tool directory and symlink, giving us a UX like addtool golang.org/x/tools/cmd/goimports@latest.
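As a rough idea of what such a helper might look like, here is a sketch. Everything in it is an assumption rather than the actual script: the function names, the TOOLS_DIR variable, the example.com/tools module path, and the choice to write a small wrapper script instead of a symlink. The wrapper passes the package path as the single leading argument, matching the toolname="${1:?tool name}" handling in the run-tool script above.

```shell
# Strip an optional "@version" suffix from a package spec.
tool_pkg() { printf '%s\n' "${1%@*}"; }

# Derive the short tool name from the last path element.
tool_name() { basename "$(tool_pkg "$1")"; }

# addtool <package>[@version]   (hypothetical helper)
addtool() {
  spec="${1:?usage: addtool <package>[@version]}"
  root="${TOOLS_DIR:-./tools}"
  name="$(tool_name "$spec")"
  pkg="$(tool_pkg "$spec")"

  mkdir -p "${root}/source/${name}"
  (
    cd "${root}/source/${name}" || exit 1
    # Each tool gets its own module; the module path is arbitrary.
    [ -f go.mod ] || go mod init "example.com/tools/${name}"
    go get -tool "$spec"
  ) || return 1

  # Write the per-tool entry point that delegates to run-tool.
  cat > "${root}/${name}" <<EOF
#!/bin/sh
exec "\$(dirname "\$0")/run-tool" "${pkg}" "\$@"
EOF
  chmod +x "${root}/${name}"
}
```

With this in place, addtool golang.org/x/tools/cmd/goimports@latest would create tools/source/goimports/go.mod and an executable tools/goimports. Note that taking the last path element as the name is a simplification and would pick "v2" for a package path ending in a major version suffix.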

Comparing the results, we can see our caching adds a small amount of overhead over the native binary, but is still dramatically better than using go tool directly:

Benchmark 1: goimports --help

Time (mean ± σ): 1.2 ms ± 0.4 ms [User: 0.7 ms, System: 0.7 ms]

Range (min … max): 0.7 ms … 3.9 ms 680 runs

Benchmark 2: ./tools/goimports --help

Time (mean ± σ): 12.5 ms ± 1.1 ms [User: 9.8 ms, System: 5.4 ms]

Range (min … max): 10.5 ms … 16.3 ms 214 runs

Benchmark 3: go tool goimports --help

Time (mean ± σ): 77.6 ms ± 13.4 ms [User: 117.8 ms, System: 106.0 ms]

Range (min … max): 60.5 ms … 121.2 ms 28 runs

Warning: Ignoring non-zero exit code.

Summary

goimports --help ran

10.21 ± 3.30 times faster than ./tools/goimports --help

63.50 ± 22.58 times faster than go tool goimports --help

For bigger tools, the difference is more pronounced; the overhead of the caching we add shouldn't scale with the size of the tool, so should be a relatively fixed overhead.

Benchmark 1: trivy --help

Time (mean ± σ): 55.7 ms ± 3.5 ms [User: 52.8 ms, System: 30.4 ms]

Range (min … max): 49.2 ms … 66.8 ms 54 runs

Benchmark 2: ./tools/trivy --help

Time (mean ± σ): 56.5 ms ± 2.5 ms [User: 65.4 ms, System: 24.7 ms]

Range (min … max): 51.2 ms … 63.5 ms 53 runs

Benchmark 3: go tool trivy --help

Time (mean ± σ): 471.2 ms ± 26.7 ms [User: 2148.9 ms, System: 550.7 ms]

Range (min … max): 431.1 ms … 519.5 ms 10 runs

Summary

trivy --help ran

1.02 ± 0.08 times faster than ./tools/trivy --help

8.47 ± 0.72 times faster than go tool trivy --help

Module sharing

In the approach presented, each tool gets its own go.mod.

While this offers maximum isolation, it's a bit of a pain.

Most tools are probably fine to use a shared dependency state (with other tools; I don't think I would ever share with the main project).

The full isolation approach ended up with over 10,000 lines of module cruft in one repo I tested it out in.

Fortunately, it's pretty easy to tweak things to share the same go.mod.

The go tool -modfile=xxx flag can be used to select a specific module file.

We can assign any tool to any file, so we could have common-tools.mod and isolated-tool.mod, for example, if a specific tool has bad interactions with others.

Since we abstracted the go tool invocation, we can also change this transparently without users noticing.
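A minimal sketch of that mapping, assuming the two file names from the example above and (purely for illustration) that trivy is the tool that needs isolation. modfile_for and sumfile_for are hypothetical helper names.

```shell
# modfile_for <tool name>
# Hypothetical mapping from a tool to the module file it should use.
modfile_for() {
  case "$1" in
    trivy) printf '%s\n' "isolated-tool.mod" ;;  # illustrative choice only
    *)     printf '%s\n' "common-tools.mod" ;;
  esac
}

# sumfile_for <tool name>
# Go derives the checksum file from -modfile by swapping ".mod" for ".sum".
sumfile_for() {
  mod="$(modfile_for "$1")"
  printf '%s\n' "${mod%.mod}.sum"
}
```

The wrapper could then pass -modfile="$(modfile_for "${name}")" to go tool, and hash the matching .mod and .sum pair instead of go.* when building the cache key. As far as I can tell from go help build, a file named go.mod must still exist in the directory so Go can find the module root, even though it is not read when -modfile is given.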

Summary

Overall, I think the new tools support smooths out some rough edges in tool consumption. However, it is far from a perfect solution for all use cases out of the box. With some work, though, I think it can be useful.