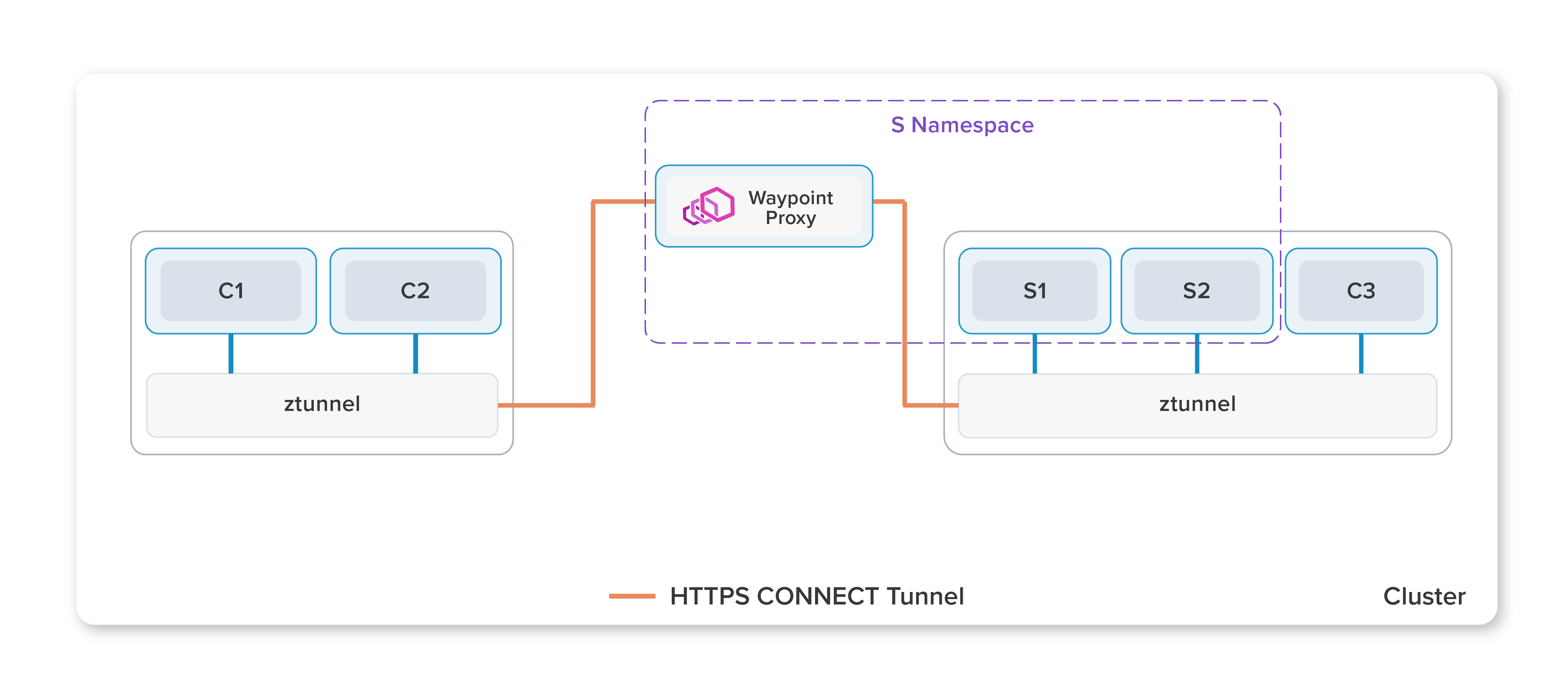

Istio "waypoint proxies" are a new architectural component introduced in Istio ambient mode last year. I suggest reading the blog for more information, but at a high level, a waypoint proxy moves the traditional service mesh sidecar proxy to a remote standalone deployment. Istio ensures that all traffic going to a pod is routed through the waypoint, so policies are always enforced.

In this post, I will go over how a similar architecture can be achieved with standard Kubernetes primitives, and how this compares to using a waypoint proxy.

A basic setup.

First, our goal will be to stand up a dedicated deployment to handle HTTP functionality.

In my examples I will do a canary deployment, but this could be a number of other configurations.

I am going to do this with an Istio Gateway because it makes some later steps easier, and I like Istio.

First we just install the Istio control plane:

$ istioctl install -y --set profile=minimal

Then we can deploy a test server. This has 2 versions running, so we can test a canary rollout. Note Istio injection isn't enabled.

apiVersion: v1
kind: Namespace
metadata:
  name: server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-v1
  namespace: server
  labels:
    app: echo-versions
spec:
  selector:
    matchLabels:
      app: echo-versions
      version: v1
  template:
    metadata:
      labels:
        app: echo-versions
        version: v1
    spec:
      containers:
      - name: echo
        image: gcr.io/istio-testing/app:latest
        args:
        - --port=80
        - --port=8080
---
apiVersion: v1
kind: Service
metadata:
  name: echo-v1
  namespace: server
spec:
  selector:
    app: echo-versions
    version: v1
  ports:
  - name: http
    port: 80
  - name: http-alt
    port: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-v2
  namespace: server
  labels:
    app: echo-versions
spec:
  selector:
    matchLabels:
      app: echo-versions
      version: v2
  template:
    metadata:
      labels:
        app: echo-versions
        version: v2
    spec:
      containers:
      - name: echo
        image: gcr.io/istio-testing/app:latest
        args:
        - --port=80
        - --port=8080
---
apiVersion: v1
kind: Service
metadata:
  name: echo-v2
  namespace: server
spec:
  selector:
    app: echo-versions
    version: v2
  ports:
  - name: http
    port: 80
  - name: http-alt
    port: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: echo
  namespace: server
spec:
  selector:
    app: echo-versions
  ports:
  - name: http
    port: 80
  - name: http-alt
    port: 8080

And finally a test client. This just runs a shell pod we will send requests from:

apiVersion: v1
kind: Namespace
metadata:
  name: client
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shell
  namespace: client
spec:
  selector:
    matchLabels:
      app: shell
  template:
    metadata:
      labels:
        app: shell
    spec:
      containers:
      - name: shell
        image: howardjohn/shell
        args:
        - /bin/sleep
        - infinity

Next just make sure our setup works...

$ kubectl exec -n client deployment/shell -- curl -s echo.server

ServiceVersion=

ServicePort=80

Host=echo.server

URL=/

Method=GET

Proto=HTTP/1.1

IP=10.68.0.16

RequestHeader=Accept:*/*

RequestHeader=User-Agent:curl/8.2.1

Hostname=echo-v1-8686bf7d4-fsqt9

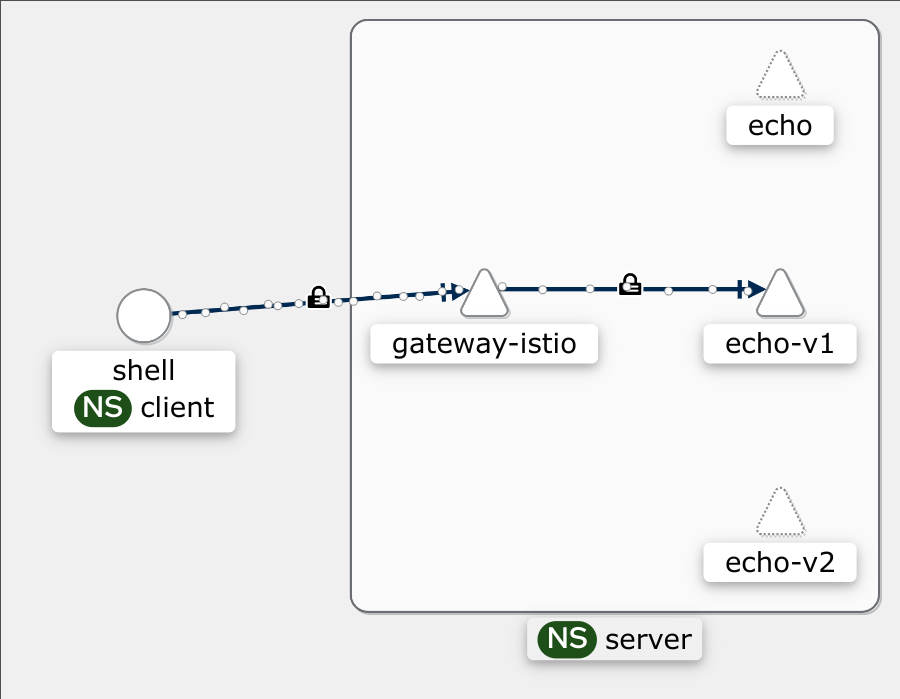

Our current architecture:

Adding an L7 proxy

Next, we want to add some canary routing (or other HTTP functionality) to our service.

To do this, we will route traffic through a gateway.

Unlike common ingress usages, we won't give this an external (LoadBalancer) IP; a ClusterIP will suffice.

This is pretty easy to add.

First, we will need the Gateway API CRDs:

$ kubectl apply -k "https://github.com/kubernetes-sigs/gateway-api/config/crd?ref=v0.8.0-rc2"

Then deploy a Gateway and HTTPRoute:

apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: gateway
  namespace: server
  annotations:
    networking.istio.io/service-type: ClusterIP
spec:
  gatewayClassName: istio
  listeners:
  - name: default
    port: 80
    protocol: HTTP
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo
  namespace: server
spec:
  hostnames: [echo.server.mesh.howardjohn.net]
  parentRefs:
  - name: gateway
  rules:
  - backendRefs:
    - name: echo-v1
      port: 80
      weight: 1
    - name: echo-v2
      port: 80
      weight: 2

Now we can send our request:

$ IP=$(kubectl get gtw gateway -n server -ojsonpath='{.status.addresses[0].value}')

$ kubectl exec -n client deployment/shell -- curl -s $IP -H "Host: echo.server.mesh.howardjohn.net"

ServiceVersion=

ServicePort=80

Host=echo.server.mesh.howardjohn.net

URL=/

Method=GET

Proto=HTTP/1.1

IP=10.68.3.16

RequestHeader=Accept:*/*

RequestHeader=User-Agent:curl/8.2.1

RequestHeader=X-Envoy-Attempt-Count:1

RequestHeader=X-Request-Id:108e7bd6-3831-4814-bab3-8404d197f570

Hostname=echo-v1-8686bf7d4-fsqt9

$ kubectl exec -n client deployment/shell -- curl -s echo.server # Direct access still works.

This is a bit awkward to use (we will address that next), but we can see the request is now going through the gateway from a few headers that are added.
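The HTTPRoute gives echo-v1 a weight of 1 and echo-v2 a weight of 2, so over many requests roughly a third should land on v1. A rough way to check this is to send a batch of requests and count which version answered. This is only a sketch: the helper names tally_versions and sample_gateway are made up for this example, and it assumes a POSIX-ish shell with the same kubectl context and $IP variable used above.

```shell
#!/usr/bin/env bash

# tally_versions (hypothetical helper): reads echo responses on stdin and
# prints "<version> <count>" for each version seen in the Hostname= lines.
tally_versions() {
  grep '^Hostname=' \
    | sed -E 's/^Hostname=(echo-v[0-9]+)-.*/\1/' \
    | sort | uniq -c \
    | awk '{ print $2, $1 }'
}

# sample_gateway (hypothetical helper): sends COUNT requests through the
# gateway at IP from the shell pod, then tallies the responding versions.
sample_gateway() {
  local ip="$1" count="${2:-30}" i
  for i in $(seq "$count"); do
    kubectl exec -n client deployment/shell -- \
      curl -s "$ip" -H "Host: echo.server.mesh.howardjohn.net"
  done | tally_versions
}

# Usage against a live cluster (not run here):
#   sample_gateway "$IP" 30
```

The split is probabilistic, so a small sample will only approximate the 1:2 ratio.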

Our updated architecture:

Adding DNS

The above call is fairly awkward and hard to use, since we don't have DNS. We can manually address this, but that is no fun.

Fortunately, external-dns has us covered.

I am running on GCP, but the same can be done almost anywhere.

First we need to create a DNS zone and allow external-dns to administer it:

$ gcloud dns managed-zones create "howardjohn-net" --dns-name "howardjohn.net." \
    --description "Automatically managed zone by kubernetes.io/external-dns"

$ gcloud iam service-accounts create external-dns

$ gcloud projects add-iam-policy-binding $PROJECT \
    --member "serviceAccount:external-dns@$PROJECT.iam.gserviceaccount.com" \
    --role roles/dns.admin

$ gcloud iam service-accounts add-iam-policy-binding external-dns@$PROJECT.iam.gserviceaccount.com \
    --role "roles/iam.workloadIdentityUser" \
    --member "serviceAccount:$PROJECT.svc.id.goog[external-dns/external-dns]"

Then deploy external-dns:

apiVersion: v1
kind: Namespace
metadata:
  name: external-dns
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
  namespace: external-dns
  annotations:
    # The GCP service account created above; substitute your project ID for $PROJECT
    iam.gke.io/gcp-service-account: external-dns@$PROJECT.iam.gserviceaccount.com
  labels:
    app.kubernetes.io/name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns
  labels:
    app.kubernetes.io/name: external-dns
rules:
- apiGroups: [""]
  resources: ["namespaces"]
  verbs: ["get","watch","list"]
- apiGroups: ["gateway.networking.k8s.io"]
  resources: ["gateways","httproutes","grpcroutes","tlsroutes","tcproutes","udproutes"]
  verbs: ["get","watch","list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
  labels:
    app.kubernetes.io/name: external-dns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
- kind: ServiceAccount
  name: external-dns
  namespace: external-dns
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
  namespace: external-dns
  labels:
    app.kubernetes.io/name: external-dns
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app.kubernetes.io/name: external-dns
  template:
    metadata:
      labels:
        app.kubernetes.io/name: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
      - name: external-dns
        image: registry.k8s.io/external-dns/external-dns:v0.13.5
        args:
        - --source=gateway-httproute
        - --events
        - --domain-filter=howardjohn.net # will make ExternalDNS see only the hosted zones matching provided domain, omit to process all available hosted zones
        - --provider=google
        - --log-format=json # google cloud logs parses severity of the "text" log format incorrectly
        - --policy=upsert-only # would prevent ExternalDNS from deleting any records, omit to enable full synchronization
        - --registry=txt
        - --txt-owner-id=my-identifier

Finally, we can now call the DNS name:

$ kubectl exec -n client deployment/shell -- curl -s echo.server.mesh.howardjohn.net # Through gateway

$ kubectl exec -n client deployment/shell -- curl -s echo.server # Direct to pod

Now we just need to update all our clients to use the new DNS name and we are good to go.

Note: to work around a bug in external-dns I am using a custom Istio build.

Enforcing traffic goes through the gateway

With a waypoint, we may want to apply security policies. A security policy isn't very effective if users can just bypass it by calling the Service directly.

We can mitigate this by only accepting traffic on port 80 from the Gateway itself with a NetworkPolicy.

This is generally fairly hard to do in a portable way; the Gateway could be creating a Cloud load balancer, a pod in the cluster, or anything else.

However, we can rely on some implementation details of Istio: the Gateway will deploy Pods with a well-known label.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: echo-enforce-waypoint
  namespace: server
spec:
  # For the "echo" pods...
  podSelector:
    matchLabels:
      app: echo-versions
  policyTypes:
  - Ingress
  # Restrict ingress
  ingress:
  # On port 80, traffic must come from the Gateway
  - ports:
    - port: 80
    from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: server
      podSelector:
        matchLabels:
          istio.io/gateway-name: gateway
  # For our other ports, traffic from any pod is fine.
  # An empty namespaceSelector matches every namespace; a bare podSelector
  # would only match pods in this policy's own namespace.
  - from:
    - namespaceSelector: {}
    ports:
    - port: 8080

An updated view of where we are at:

Now clients must access the workloads via the gateway at echo.server.mesh.howardjohn.net instead of directly at echo.server.
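One way to confirm the policy is doing its job is to compare a direct call with a call through the gateway. The following is a rough sketch, assuming the cluster's CNI actually enforces NetworkPolicy and that kubectl exec passes curl's exit status through; describe_curl_exit and probe are names invented for this example.

```shell
#!/usr/bin/env bash

# describe_curl_exit (hypothetical helper): turn a curl exit status into a
# short verdict. curl exits with 28 when --max-time expires, which is the
# usual symptom of packets being silently dropped by a NetworkPolicy.
describe_curl_exit() {
  case "$1" in
    0)  echo "reachable" ;;
    28) echo "timed out (likely blocked)" ;;
    *)  echo "failed (curl exit $1)" ;;
  esac
}

# probe (hypothetical helper): curl URL from the client shell pod, giving up
# after 5 seconds, and print a one-line verdict.
probe() {
  local url="$1" status=0
  kubectl exec -n client deployment/shell -- \
    curl -s -o /dev/null --max-time 5 "$url" || status=$?
  printf '%s: %s\n' "$url" "$(describe_curl_exit "$status")"
}

# Usage against a live cluster (not run here):
#   probe echo.server                       # direct, port 80
#   probe echo.server.mesh.howardjohn.net   # through the gateway
```

With the policy applied, I would expect the direct call to time out while the call through the gateway stays reachable. Some CNIs reject rather than drop, in which case the direct call shows up as a different failure.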

Doing it with waypoints

If we wanted to do the same setup with Istio ambient mode waypoints, the steps are a bit different.

First, we deploy the waypoint for the namespace:

$ istioctl x waypoint apply -n server

Then deploy the route:

apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo
  namespace: server
spec:
  parentRefs: # Note: we bind to the Service itself, not a Gateway
  - name: echo
    kind: Service
    group: ""
  rules:
  - backendRefs:
    - name: echo-v1
      port: 80
      weight: 1
    - name: echo-v2
      port: 80
      weight: 2

With this, we end up with the same architecture:

What is different

Comparing the two approaches:

- Traffic target: with waypoints, the behavior of the existing Service is modified. For "DIY", an alternative DNS name/IP is used.

- Ease of setup: you decide :-).

- Protocol support: the DIY approach mostly supports only HTTP and TLS passthrough; for TCP, only port can be used to distinguish multiple routes. This gets awkward if there are a large number of routes. For waypoints, traffic has multi-level matching, first matching the target Service, then matching routes for that service. This makes non-HTTP routing a bit more seamless.

- Transport Encryption: with ambient mesh, the traffic between the client, waypoint, and backend will all be securely encrypted with mutual TLS (assuming both client and backend are part of the mesh). With the DIY approach, there is no automatic transport encryption (manually configuring certificates on the Gateway and having clients use https can partially solve this, of course).

- Client aware policies: building on top of the mutual TLS transport encryption, waypoints can also securely identify clients based on their identity. This allows them to perform identity-aware policies, such as "only allow requests from client". This is possible in the DIY model, but you would typically need to provision client certificates, OAuth, or other complex authentication infrastructure.

- Arbitrary TLS settings: in the DIY approach, we have full control over the Gateway and can do things like serve over TLS with custom certificates. Waypoints do not currently offer this flexibility.

Going further: a hybrid approach

In the manual approach, we don't really use Istio much at all - we use it as a Gateway only, which could have been any other implementation.

Can we ease some of the differences if we have more of Istio involved? Some - but not all.

First, let's re-deploy Istio with ambient mode enabled. Note this just enables the L4 node functionality -- ztunnel -- not waypoints, which are deployed on a per-namespace basis. Since we are doing "Waypoints the hard way", we will of course not actually deploy a waypoint.

$ istioctl install -y --set profile=ambient

And enable mesh for each namespace:

$ kubectl label ns server istio.io/dataplane-mode=ambient

$ kubectl label ns client istio.io/dataplane-mode=ambient

We will also want one more configuration for the Gateway; it gets confused when it is running with ambient redirection enabled. Typically it would be run without this enabled.

apiVersion: networking.istio.io/v1beta1
kind: ProxyConfig
metadata:
  name: disable-hbone
  namespace: server
spec:
  selector:
    matchLabels:
      istio.io/gateway-name: gateway
  environmentVariables:
    ISTIO_META_ENABLE_HBONE: "false"

I also remove the NetworkPolicy I created earlier; we will replace it later.

Now our original requests can be sent:

$ kubectl exec -n client deployment/shell -- curl -s echo.server.mesh.howardjohn.net # Through gateway

$ kubectl exec -n client deployment/shell -- curl -s echo.server # Direct to pod

These behave the same as before. Istio ambient transparently adds Mutual TLS encryption and authentication.

Because it is transparent, it makes for a poor blog. Fortunately, ambient also enables telemetry, which can be visualized to make a nice picture showing the traffic flow and that it is encrypted. This looks just like the architecture diagrams we drew above!

We can also add back an equivalent policy to our NetworkPolicy, but operating on mTLS identity:

apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: echo-enforce-waypoint
  namespace: server
spec:
  selector:
    matchLabels:
      # Matches the app label set on the echo Deployments above
      app: echo-versions
  action: ALLOW
  rules:
  - from:
    - source:
        # Allow traffic from the gateway on any port
        principals: ["cluster.local/ns/server/sa/gateway-istio"]
  - to:
    # Other ports allow any traffic
    - operation:
        notPorts: ["80"]
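The principal in the policy follows Istio's trust-domain/ns/namespace/sa/service-account shape. As a small sketch of how that string is put together (istio_principal is a name invented here, and cluster.local is assumed to be the mesh's trust domain, as it is in the policy above):

```shell
#!/usr/bin/env bash

# istio_principal (hypothetical helper): build the principal string that an
# AuthorizationPolicy matches on, from trust domain, namespace, and
# service account name.
istio_principal() {
  printf '%s/ns/%s/sa/%s\n' "$1" "$2" "$3"
}

# The gateway identity used in the policy above.
istio_principal cluster.local server gateway-istio

# To confirm which service account the gateway pods actually run as
# (needs a live cluster, not run here):
#   kubectl get pods -n server -l istio.io/gateway-name=gateway \
#     -o jsonpath='{.items[*].spec.serviceAccountName}'
```

If the gateway pods report a different service account, the principal in the policy would need to change to match.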

With this, we have closed some of the gaps between waypoints and DIY: we now have transport encryption.

However, we still don't have a way to act on this identity from the gateway itself.

Additionally, this would not work with a Cloud load balancer implementation.

Going further: transparent insertion

If we want to transparently make a service go through the load balancer, we don't have much choice but to intercept the traffic and redirect it. However, if we are okay with missing some (fairly important) edge cases, we can provide a fairly seamless ability by using DNS.

Kubernetes allows customizing the DNS search list. This is what makes a query like curl echo.server resolve to echo.server.svc.cluster.local.

In our case, we will override it so echo.server resolves to echo.server.mesh.howardjohn.net.

We will need the following changes:

apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo
  namespace: server
spec:
  hostnames:
  - echo.server.mesh.howardjohn.net
  # Add the short name, as we want to match this as well.
  # Note external DNS is configured to only match "*.howardjohn.net" already
  - echo.server
  parentRefs:
  - name: gateway
  rules:
  - backendRefs:
    - name: echo-v1
      port: 80
      weight: 1
    - name: echo-v2
      port: 80
      weight: 2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shell
  namespace: client
spec:
  selector:
    matchLabels:
      app: shell
  template:
    metadata:
      labels:
        app: shell
    spec:
      containers:
      - name: shell
        image: howardjohn/shell
        args:
        - /bin/sleep
        - infinity
      # Add a new search to our client
      dnsConfig:
        searches:
        - mesh.howardjohn.net

Basically, we just update our routes to include the short name, and configure our client pods with the new DNS configuration.

In this case, Kubernetes will append the search clause, so we also need to delete the echo Service.

If we want to prefer the load balancer, we can use a dnsPolicy that lets us replace instead of append.
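To see why the echo Service has to go, it helps to list the names the resolver tries, in order. The sketch below is a simplification: search_candidates is a made-up helper, the search list shown is what I would expect for a pod in the client namespace (node-level search domains are left out), and it assumes the name has fewer dots than the ndots setting, so search domains are tried before the bare name.

```shell
#!/usr/bin/env bash

# search_candidates (hypothetical helper): print the names a resolver would
# try, in order, for a relative NAME with fewer dots than ndots: each search
# domain is appended in turn, and the bare name is tried last.
search_candidates() {
  local name="$1" domain
  shift
  for domain in "$@"; do
    printf '%s.%s\n' "$name" "$domain"
  done
  printf '%s\n' "$name"
}

# Assumed search list for a pod in the "client" namespace, with our extra
# entry appended at the end.
search_candidates echo.server \
  client.svc.cluster.local svc.cluster.local cluster.local \
  mesh.howardjohn.net
```

The second candidate, echo.server.svc.cluster.local, is the echo Service, so it wins for as long as that Service exists. Once it is deleted, the lookup falls through to echo.server.mesh.howardjohn.net, which is the gateway.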

Finally, we can test this out:

$ kubectl exec -n client deployment/shell -- curl -s echo.server.mesh.howardjohn.net # Through gateway

$ kubectl exec -n client deployment/shell -- curl -s echo.server # Through gateway

$ kubectl exec -n client deployment/shell -- curl -s echo.server.svc # no longer works, service removed